Another guest post by Dave Pollard… and it’s a doozy.

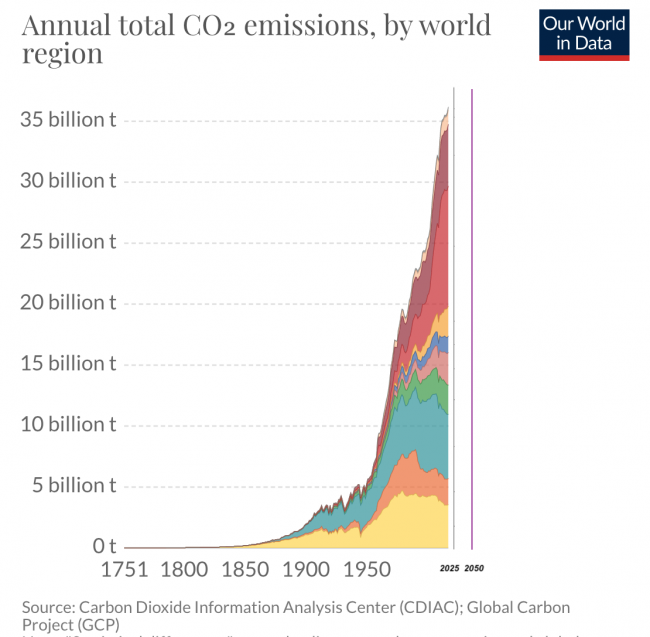

graph by Our World in Data

The latest IPCC report says that in order to prevent catastrophic climate change, global CO2 emissions will have to reach net zero by 2050, down from their current level of 33-38B tons per year, which is rising by nearly 2%/year. The IPCC’s past reports have been almost laughably conservative and optimistic, which is just one of the reasons Extinction Rebellion have set a net-zero deadline of 2025, just 6 years from now.

It should be noted that total greenhouse gases will continue to rise for at least another 15-20 years after net zero CO2 is achieved, due to the ongoing run-on effects of other greenhouse gases, notably methane, that have been unleashed ‘naturally’ as a result of the damage we have already done to the atmosphere. And it is at best a long shot that even if we were to achieve net zero CO2 by 2025, it isn’t already too late to prevent climate collapse. Our knowledge of the science remains abysmal and every new report paints a bleaker picture. Expect a fierce anti-science, anti-reality backlash as more and more climate scientists concur that runaway, civilization-ending climate change is inevitable no matter what we do, or don’t do.

So what would be required to reverse the hockey-stick trajectory shown in the chart above and achieve net zero CO2 in just 6 years, for a population that will at current rates be 7% (at least 1/2 billion people) greater than it is now?
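To put rough numbers on that question, here is a minimal back-of-envelope sketch in Python. It only compounds the figures quoted above (33-38B tons per year, treating "nearly 2%/year" as an upper bound of 2%, and 7% population growth over 6 years). The current population of 7.7 billion is my assumption, not a figure from the post, and the `project` helper is purely illustrative.

```python
# Back-of-envelope arithmetic only; not a climate or demographic model.

def project(value, annual_rate, years):
    """Compound `value` at `annual_rate` per year for `years` years."""
    return value * (1 + annual_rate) ** years

YEARS = 6                      # now until the 2025 deadline (from the post)
EMISSIONS_LOW = 33.0           # billion tons CO2/year (from the post)
EMISSIONS_HIGH = 38.0          # billion tons CO2/year (from the post)
EMISSIONS_GROWTH = 0.02        # "nearly 2%/year", used here as an upper bound
POPULATION_GROWTH_TOTAL = 0.07 # 7% over the 6 years (from the post)
POPULATION_NOW = 7.7           # billions; ASSUMPTION, not stated in the post

# Where emissions would sit in 6 years if the current trend simply continued.
low_2025 = project(EMISSIONS_LOW, EMISSIONS_GROWTH, YEARS)
high_2025 = project(EMISSIONS_HIGH, EMISSIONS_GROWTH, YEARS)

# Extra people implied by 7% growth, and the equivalent yearly growth rate.
added_people = POPULATION_NOW * POPULATION_GROWTH_TOTAL
implied_annual_pop_growth = (1 + POPULATION_GROWTH_TOTAL) ** (1 / YEARS) - 1

print(f"Business-as-usual emissions in {YEARS} years: "
      f"{low_2025:.1f} to {high_2025:.1f}B tons/year")
print(f"Additional people: {added_people:.2f} billion")
print(f"Implied population growth: {implied_annual_pop_growth:.2%} per year")
```

Under those assumptions, business as usual would put emissions at roughly 37 to 43B tons per year by 2025, so net zero means eliminating not just today's emissions but also the growth that would otherwise be added, and doing so while serving about half a billion more people.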

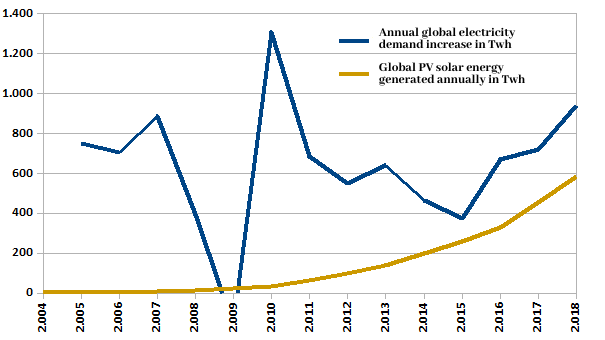

I think that, while parliaments and political parties and scientists will readily accept XR’s first demand of proclaiming a climate emergency “and communicating the urgency for change”, for most of them the second demand, reducing greenhouse gas emissions to net zero and halting biodiversity loss by 2025, is simply absurd. Western economies have merely shifted production to Asia; their accelerating consumption of goods whose production emits CO2 continues unabated. Our global economy depends utterly on cheap hydrocarbon energy. It’s completely preposterous to think a short-term shift is even vaguely possible. Renewables won’t help us; as the chart below shows, new solar energy isn’t even keeping up with the annual increases in demand, let alone cutting into the still-accelerating need for hydrocarbon energy:

graph by Pedro Prieto, cited by Bill Rees

So let’s be preposterous. What would have to happen, at a minimum, to achieve this valiant goal? Based on what I’ve read and on my understanding of complex systems, here are just a few of the things that I think would have to happen:

- An immediate, complete and permanent grounding of all air traffic. That means no executive jets, no flying for diplomatic or business meetings or emergency family reasons — or military adventures. Achieving meaningful carbon reductions is simply impossible as long as planes are flying.

- Immediate rationing of liquid/gas hydrocarbons for essential and community purposes only. To get all the hydrocarbon-fuelled cars and trucks off the road in six years, no more travel in personal hydrocarbon-burning vehicles could be permitted. And we’d have to work hard to convert all public buses, trains and ships to non-CO2-producing vehicles in that time. If you look at supply/demand curves for gasoline, we’d be looking at carbon taxes in the area of 1000% to ‘incent’ such conversions. My guess is that most shipping and much ‘privatized’ public transit would not be able to stay in business with these constraints. So say goodbye to most imported goods.

- All hydrocarbons in the ground would have to stay there, all over the world, effective immediately. We’d have to make do with existing reserves for a few years until everything had been converted to renewable resources.

- Industrial manufacturing based on fossil fuel use would have to convert in equal steps over the six-year timeframe, and any plants failing to do so would have to be shuttered.

- Construction of new buildings and facilities would have to stop entirely. Existing buildings would have to phase out use of fossil fuels over the six years through rationing and cut-offs for non-compliance, and they would have to be remodelled to meet stringent net-zero energy standards and to accommodate all new building needs.

- Trillions of trees would have to be planted, and all forestry and forest clearing stopped entirely. Likewise, production of other new high-energy-use building materials (especially concrete) would have to cease. We’d have to quickly learn to re-use the wood and other building materials we have now.

- All this centralized, ‘unprofitable’ activity (and enforcement of the restrictions) would need to be funded through taxes. As during the Second World War and the years that followed it, the rich could expect tax rates north of 90% on income. And a very large wealth tax would be needed to quickly redistribute wealth so that the poor didn’t overwhelmingly suffer from the new restrictions.

- The consequences of the above would be an immediate and total collapse of stock and real estate markets and the flow of capital. The 90% of the world’s wealth that is purely financial and not real (stocks, bonds, pensions etc.) would quickly become substantially worthless in a ‘negative-growth’ economy. That would add a complete economic collapse to the crises already facing the governments trying to administer the transition to net zero. In such an economic collapse, many governments would simply fail, leaving communities in their jurisdictions to fend for themselves, and making it likely that much of the world would abandon the constraints of the net-zero transition because they wouldn’t have the power or resources to even begin to enforce them.

Of course, none of this will happen. Even if governments had the power and wisdom to understand what was really required to make the net-zero transition, it would be political suicide for them to implement it. It won’t happen by 2025. It won’t happen by 2050. It won’t and wouldn’t happen by 2100 even if we had that long, which we do not.

The message of all this is that we cannot save our globalized civilization from the imminent end of stable climate, affordable energy, and the industrial economy — all of which are interdependent. No one (and no group) has the power to shift these massive global systems to a radically new trajectory, without which (and perhaps even with which) our world and its human civilization are soon going to look very different.

No one knows how, or how quickly, this will all play out, and the scenarios under which collapse will occur range from the humane, collaborative and relatively free from suffering to the very dystopian. There is therefore no point dwelling on them, or even trying to plan for them. As always, we will continue to do our best, each of us, with the situation that presents itself each day, and our love for our planet and its wondrous diversity will play into that. Our best will not be enough, but we will do it anyway.